Video generative models can be regarded as world simulators due to their ability to capture dynamic, continuous changes inherent in real-world environments.

These models integrate high-dimensional information across visual, temporal, spatial, and causal dimensions, enabling predictions of subjects in various states.

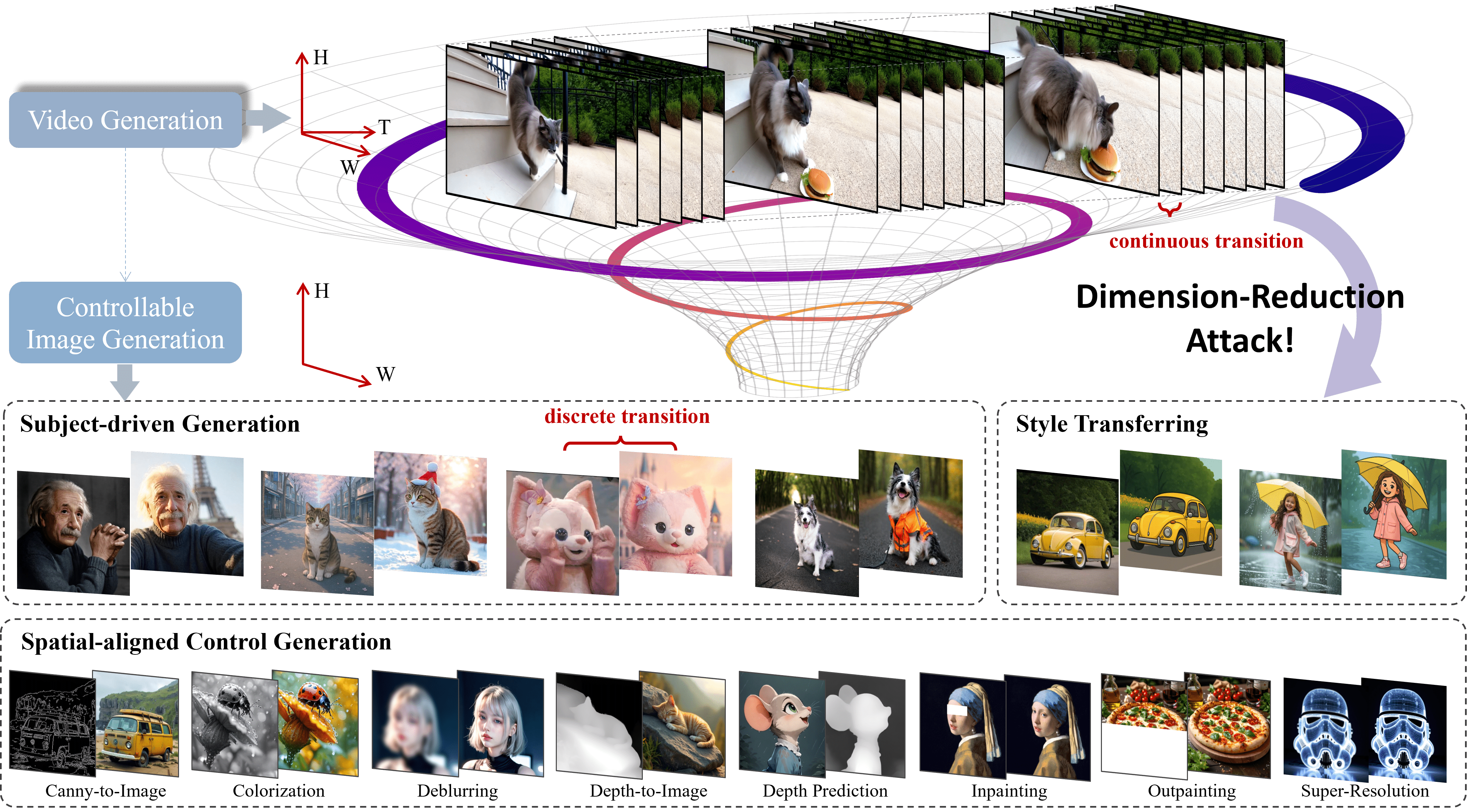

A natural and valuable research direction is to explore whether a fully trained video generative model in high-dimensional space can effectively support lower-dimensional tasks such as controllable image generation.

In this work, we propose a paradigm for video-to-image knowledge compression and task adaptation, termed Dimension-Reduction Attack (DRA-Ctrl), which exploits the strengths of video models, including long-range context modeling and flattened full attention, to perform various generation tasks.

Specifically, to bridge the challenging gap between continuous video frames and discrete image generation, we introduce a mixup-based transition strategy that ensures smooth adaptation.
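The abstract does not spell out how the mixup-based transition is constructed. The following is a minimal sketch that assumes a plain linear blend. Under that assumption, a pseudo-video starts at the condition image, ends at the target image, and fills the frames in between with element-wise mixups, so the video model sees a gradual change rather than an abrupt one. The function name `mixup_transition` and the linear schedule are illustrative assumptions, not the authors' implementation.

```python
from typing import List

Frame = List[float]  # a flattened image or latent, for illustration only


def mixup_transition(condition: Frame, target: Frame, num_frames: int) -> List[Frame]:
    """Build a hypothetical pseudo-video that moves from `condition` to `target`.

    Assumption: frame i is the linear blend (1 - a_i) * condition + a_i * target
    with a_i = i / (num_frames - 1). The first frame is therefore the condition
    image, the last frame is the target image, and the frames in between are mixups.
    """
    if num_frames < 2:
        raise ValueError("need at least the condition frame and the target frame")
    if len(condition) != len(target):
        raise ValueError("condition and target must have the same size")
    video: List[Frame] = []
    for i in range(num_frames):
        alpha = i / (num_frames - 1)  # mixing coefficient grows from 0 to 1
        video.append([(1 - alpha) * c + alpha * t for c, t in zip(condition, target)])
    return video
```

For example, `mixup_transition([0.0, 1.0], [1.0, 0.0], 3)` yields `[[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]`.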

Moreover, we redesign the attention structure with a tailored masking mechanism to better align text prompts with image-level control.
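The masking mechanism is described here only at a high level. The following sketch shows how a boolean attention mask over a flattened token sequence could encode a rule of this kind. Two parts of it are hypothetical choices for illustration, not the mask used in DRA-Ctrl:

- **Token order:** text, then condition frame, then target frame.
- **Masking rule:** attention is blocked between text tokens and condition-frame tokens, so the prompt interacts only with the target frame.

```python
from typing import List


def build_attention_mask(n_text: int, n_cond: int, n_target: int) -> List[List[bool]]:
    """Return mask[q][k] == True when query token q may attend to key token k.

    Hypothetical layout: [text tokens | condition-frame tokens | target-frame tokens].
    Hypothetical rule: text and condition tokens are blocked from attending to each
    other in both directions; every other pair keeps full attention.
    """
    groups = ["text"] * n_text + ["cond"] * n_cond + ["target"] * n_target
    blocked = {("text", "cond"), ("cond", "text")}
    return [[(gq, gk) not in blocked for gk in groups] for gq in groups]
```

A `True` entry means the query may attend to the key. Frameworks that expect an additive bias instead would map `True` to `0` and `False` to negative infinity.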

Experiments across diverse image generation tasks, such as subject-driven and spatially conditioned generation, show that repurposed video models outperform those trained directly on images. These results highlight the untapped potential of large-scale video generators for broader visual applications.

DRA-Ctrl provides new insights into reusing resource-intensive video models and lays the foundation for future unified generative models across visual modalities.

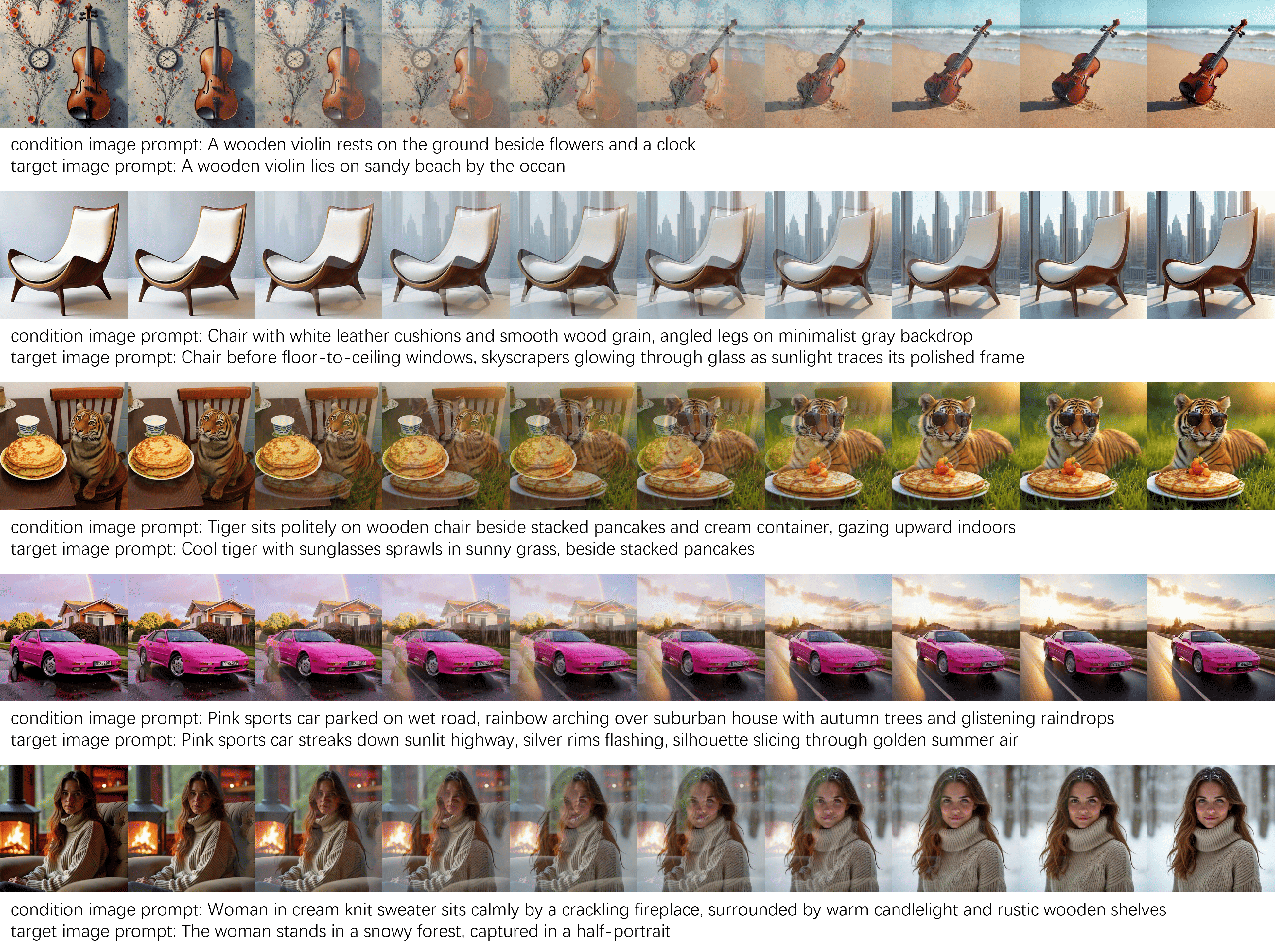

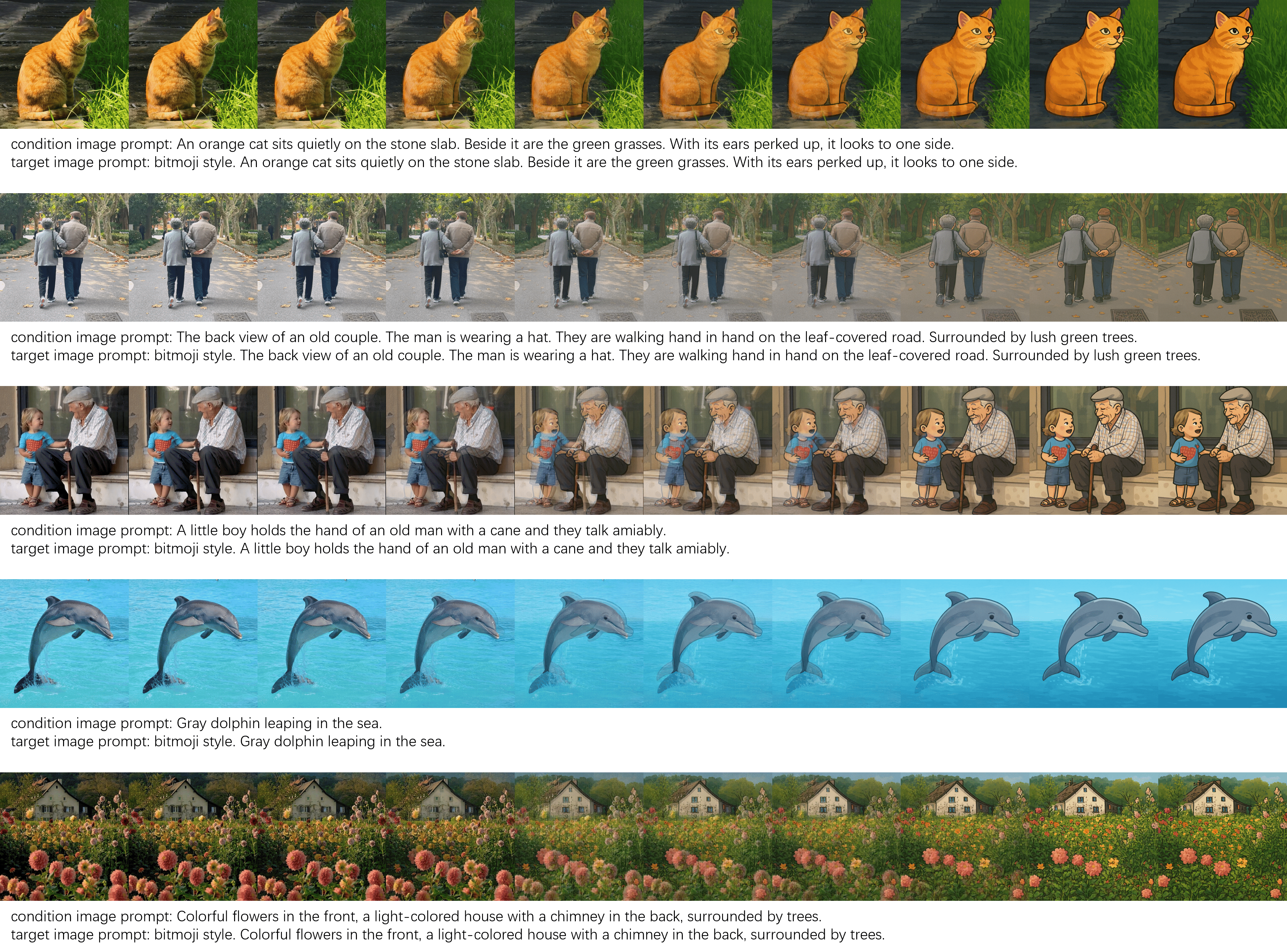

DRA-Ctrl on Multiple Controllable Image Generation Tasks

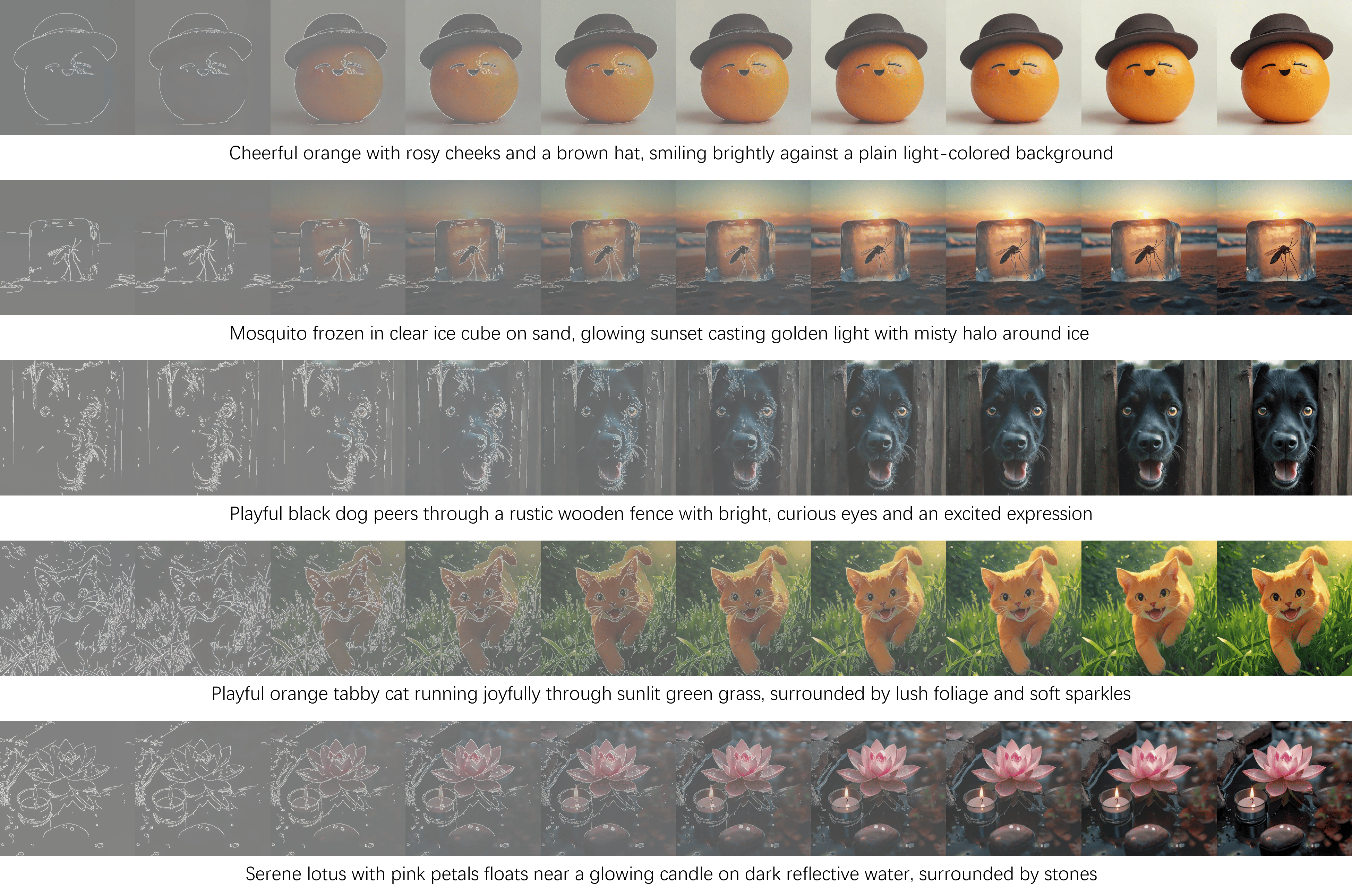

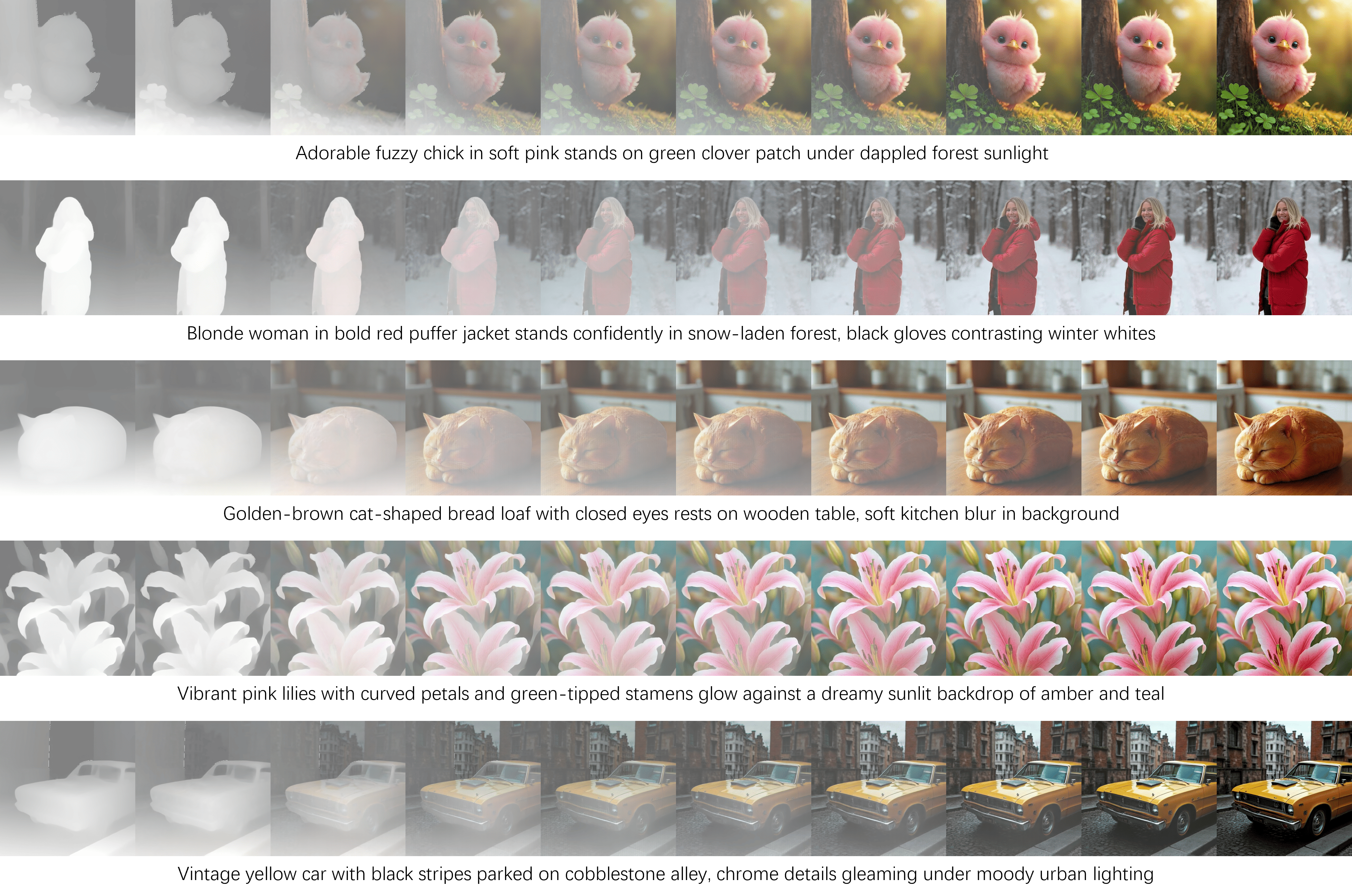

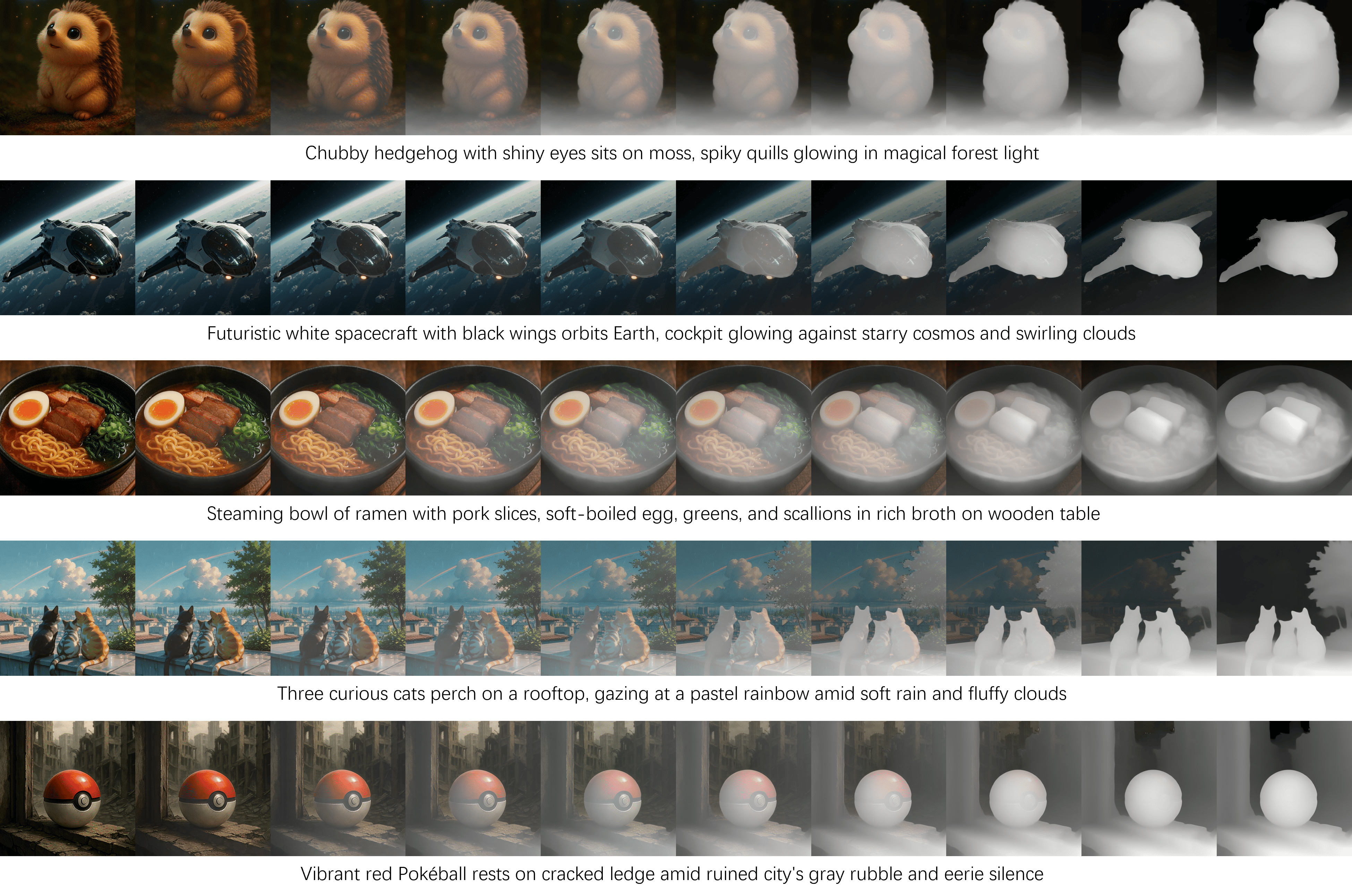

DRA-Ctrl Generation Results

Given the inherent temporal full attention and rich dynamics priors of video generative models, we argue that they can be efficiently repurposed for controllable image generation tasks.

The simplest approach to controllable image generation with video generative models is to treat the condition and target images as a two-frame video. During training, the condition image remains noiseless and is excluded from the loss, while the target image is noise-corrupted and included in the loss. During inference, the condition image stays noiseless to provide the complete control signal. However, neither a finetuned T2V model nor a finetuned I2V model meets the requirements of subject-driven generation. The T2V model lacks subject consistency, while the I2V model preserves too much similarity to the condition image and adheres poorly to the prompt.
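The two-frame baseline can be sketched as follows. The sketch makes three assumptions that the text does not state:

- It uses rectified-flow style noising, x_t = (1 - t) * x_0 + t * noise.
- Frames are flat lists of floats standing in for latents.
- The helper names are hypothetical.

The parts taken from the text are that only the target frame is noised and only the target frame contributes to the loss.

```python
import random
from typing import List, Tuple

Frame = List[float]  # a flattened latent, for illustration only


def prepare_two_frame_sample(
    condition: Frame, target: Frame, t: float, rng: random.Random
) -> Tuple[List[Frame], List[float]]:
    """Build a two-frame training input: clean condition frame, noised target frame.

    Assumption: rectified-flow style noising x_t = (1 - t) * x_0 + t * noise.
    Returns the model input frames and a per-frame loss weight (0 = excluded).
    """
    noise = [rng.gauss(0.0, 1.0) for _ in target]
    noisy_target = [(1 - t) * x + t * n for x, n in zip(target, noise)]
    frames = [list(condition), noisy_target]  # condition stays noiseless
    loss_weights = [0.0, 1.0]  # condition excluded from the loss, target included
    return frames, loss_weights


def weighted_frame_mse(pred: List[Frame], ref: List[Frame], weights: List[float]) -> float:
    """Per-frame mean squared error, averaged with the given per-frame weights."""
    total_weight = sum(weights)
    if total_weight == 0:
        return 0.0
    loss = 0.0
    for p, r, w in zip(pred, ref, weights):
        if w == 0:
            continue  # frames with zero weight (e.g. the condition) are skipped
        frame_mse = sum((a - b) ** 2 for a, b in zip(p, r)) / len(p)
        loss += w * frame_mse
    return loss / total_weight
```

Because the condition frame has zero weight, any prediction the model makes for it has no effect on the gradient. Only the target frame drives training.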

To adapt video generative models, which excel at smooth transitions, to abrupt and discontinuous transitions between images, we propose several strategies: a mixup-based shot transition strategy, Frame Skip Position Embedding (FSPE), loss reweighting, and an attention masking strategy.
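FSPE and loss reweighting are named but not defined in this section. The following sketch shows one hypothetical reading of each:

- **FSPE:** the temporal position indices of frames are spaced by a skip factor, so consecutive frames appear far apart to the video model.
- **Loss reweighting:** the final target frame gets more weight than intermediate frames, and the condition frame is left out.

Both functions and their parameters are illustrative assumptions, not the authors' definitions.

```python
from typing import List


def frame_skip_positions(num_frames: int, skip: int) -> List[int]:
    """Hypothetical FSPE: temporal position indices that jump by `skip` per frame.

    With skip=1 this is the ordinary index sequence 0, 1, 2, ...; a larger skip
    places frames farther apart in the model's temporal position space.
    """
    if num_frames < 1 or skip < 1:
        raise ValueError("num_frames and skip must be positive")
    return [i * skip for i in range(num_frames)]


def reweighted_loss_weights(num_frames: int, target_weight: float) -> List[float]:
    """Hypothetical per-frame loss weights for a condition-to-target pseudo-video.

    Frame 0 (the condition) is excluded with weight 0, intermediate frames get
    weight 1, and the final target frame gets `target_weight`.
    """
    if num_frames < 2:
        raise ValueError("need at least the condition frame and the target frame")
    return [0.0] + [1.0] * (num_frames - 2) + [target_weight]
```

With `skip=1`, `frame_skip_positions` reduces to ordinary frame indices, which makes the effect of a larger skip factor easy to compare.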

```bibtex
@misc{cao2025dimensionreductionattackvideogenerative,
  title={Dimension-Reduction Attack! Video Generative Models are Experts on Controllable Image Synthesis},
  author={Hengyuan Cao and Yutong Feng and Biao Gong and Yijing Tian and Yunhong Lu and Chuang Liu and Bin Wang},
  year={2025},
  eprint={2505.23325},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.23325},
}
```